Benchmarking Version Control Solutions for Creative Collaboration

- 1 Oct 2024

Blender Studio has used SVN (Subversion) to version control its short film productions since Elephants Dream. However, we are now looking into alternatives for the following reasons:

- Most open source projects have now fully phased out SVN, so support for it is declining.

- It is hard to find any maintained, free and open source front end for SVN that is available on Windows, Mac, and Linux. This is important as a lot of artists are not savvy with terminals, so using the official command line tools is a challenge for them.

- For quite some time now, we have had connection and speed issues with our SVN servers. This leads to a very tedious checkout/clone workflow where artists might have to retry multiple times to get a complete checkout of the project.

- Because SVN is not a distributed version control system, it is hard for artists to be a bit more spontaneous in how they create their projects.

The base requirements for a viable alternative are quite simple:

- Free and open source

- Well maintained with good multi-platform support

- Able to handle big binary files nicely. Note, though, that we only expect linear workflows to work well: branching and merging branches, for example, is not a workflow we are looking for.

- Have nice GUI clients available on Windows, Mac, and Linux.

With this in mind, the only two seemingly viable alternatives are Mercurial and Git. While both Mercurial and Git have Large File Storage (LFS) extensions, I decided to check how bad a vanilla Git or Mercurial repo would be before moving on to testing the LFS plugins.

Small Project Test

For my initial test, I started with a smaller project (project Snow) that had 1324 commits and a fairly small SVN server repository (less than 40 GB). Nearly all commits consist of binary files of some sort (.blend files, images, etc.). All .blend files were saved uncompressed to keep the binary diffs between file revisions as small as possible.

Here is the disk usage data I gathered from this test:

| Version control | Server size | Local checkout size |
|---|---|---|
| SVN | 38.7 GB | 26.5 GB |
| Git | 24.8 GB | 37.7 GB |
| Mercurial | 27.9 GB | 42.1 GB |

To my surprise, both Git and Mercurial beat SVN on the server side: their repositories were smaller than the SVN server repository! I expected SVN's binary diffing to be more space efficient for large binary files, but it seems I was wrong. Git compressed a bit better than Mercurial, but I'm guessing that they use a similar approach to compressing the stored file history.
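To put the server-side numbers in proportion, the relative savings compared with the SVN server can be computed directly from the project Snow measurements. This is plain arithmetic on the measured figures; the helper name is only for illustration.

```python
# Server repository sizes measured in the project Snow test, in GB.
SERVER_SIZES_GB = {"SVN": 38.7, "Git": 24.8, "Mercurial": 27.9}


def saving_vs_svn(size_gb: float, svn_gb: float = SERVER_SIZES_GB["SVN"]) -> float:
    """Percentage by which a repository is smaller than the SVN server repository."""
    return (1 - size_gb / svn_gb) * 100


for name in ("Git", "Mercurial"):
    print(f"{name}: {saving_vs_svn(SERVER_SIZES_GB[name]):.1f}% smaller than SVN")
# Git: 35.9% smaller than SVN
# Mercurial: 27.9% smaller than SVN
```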

On the local checkout side, we can see that the SVN checkout is smaller. However, note that both the Git and Mercurial checkouts contain the whole history, while the SVN checkout is effectively a so-called "shallow" clone: it only contains data from the most recent commit.

When I made a similar shallow Git clone containing only the most recent commit, the local checkout was 19.4 GB, which is smaller than the local SVN checkout.

Why is this? SVN stores all files from the locally accessible commits uncompressed, which means that the local checkout is always at least twice the size of all tracked files in the current commit. Only on the server side does SVN store any binary diffs.
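As a back-of-the-envelope check of that "at least twice" rule, the helpers below apply it to the project Snow numbers. They assume, as described here, one working file plus one uncompressed stored copy per tracked file, and they ignore SVN's comparatively small metadata; the function names are hypothetical.

```python
def svn_min_checkout_gb(tracked_gb: float) -> float:
    """Lower bound on an SVN checkout: the working files plus one uncompressed stored copy."""
    return 2 * tracked_gb


def svn_max_tracked_gb(checkout_gb: float) -> float:
    """Inverse of the bound: the most tracked data a checkout of this size can hold."""
    return checkout_gb / 2


print(svn_max_tracked_gb(26.5))  # 13.25
```

Under that assumption, the files in the latest Snow commit add up to at most about 13.25 GB, while the 19.4 GB shallow Git clone holds those working files plus Git's compressed objects for that one commit.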

Git (and Mercurial as well) always compresses the tracked files and tries to save additional space by checking whether there is any data overlap between the stored files.
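The benefit of finding overlap between stored files can be illustrated with Python's standard `lzma` module. This is not what Git or Mercurial actually run (Git, for instance, uses zlib plus delta compression inside its packfiles), and the data below is synthetic, but it shows the same principle: two nearly identical, uncompressed revisions cost little more than one when they are compressed as a single stream.

```python
import lzma
import random

# Synthetic stand-in for an uncompressed .blend file: repetitive, compressible bytes.
rng = random.Random(0)
words = ["mesh", "armature", "material", "object", "scene", "camera", "light", "node"]
rev1 = " ".join(rng.choice(words) for _ in range(20_000)).encode()

# The "next revision": the same file with a small edit in the middle.
mid = len(rev1) // 2
rev2 = rev1[:mid] + b"EDITED!!" + rev1[mid + 8 :]

# Compressing each revision on its own vs. compressing the history as one stream.
separately = len(lzma.compress(rev1)) + len(lzma.compress(rev2))
together = len(lzma.compress(rev1 + rev2))

print(f"stored separately: {separately} bytes")
print(f"stored together:   {together} bytes")
```

When compressed together, the second revision is encoded almost entirely as references back to identical bytes in the first one.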

Git LFS, on the other hand, stores the large binary files it tracks the same way SVN does locally (uncompressed), and this applies to the server side as well. This has some upsides, as we will see later...
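To make this concrete: with Git LFS, the Git history only contains a small text "pointer" for each tracked file, while the untouched file contents are stored as a separate object named after their SHA-256 hash. The sketch below reproduces the pointer layout from the Git LFS specification and the local object path under `.git/lfs/objects`. The helper names are ours; in practice the `git lfs` client does all of this for you.

```python
import hashlib
from pathlib import PurePosixPath


def lfs_pointer(data: bytes) -> str:
    """Build the text pointer that Git LFS commits in place of the real file contents."""
    oid = hashlib.sha256(data).hexdigest()
    return (
        "version https://git-lfs.github.com/spec/v1\n"
        f"oid sha256:{oid}\n"
        f"size {len(data)}\n"
    )


def lfs_object_path(data: bytes) -> PurePosixPath:
    """Where the untouched contents live locally: .git/lfs/objects/<aa>/<bb>/<oid>."""
    oid = hashlib.sha256(data).hexdigest()
    return PurePosixPath(".git/lfs/objects", oid[:2], oid[2:4], oid)


sample = b"example .blend contents"  # made-up file contents
print(lfs_pointer(sample))
print(lfs_object_path(sample))
```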

Large Project Stress Test

While the initial results were very promising, I wanted to check whether regular Git and Mercurial also performed well on larger projects. Therefore, I switched to a bigger project (project Gold) with over 3000 commits and an SVN server repo of around 180 GB at the time of testing.

I quickly ran into a showstopper with Mercurial, as I got the following error message: "size of 2152217417 bytes exceeds maximum revlog storage of 2GiB". I couldn't find a solution for this with my Google-Fu. If there is one, I assume that Mercurial and Git would perform almost the same for our use cases. So all testing from this point onwards was done only with SVN and Git.
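If someone wants to retry the Mercurial conversion, a cheap first step is to list the files that would hit that limit. The failing size in the error, 2,152,217,417 bytes, is just over 2 GiB (2,147,483,648 bytes). The sketch below walks a checkout and flags files above a threshold. It assumes, as our own reading of the message, that the error is triggered by individual file revisions larger than 2 GiB, and it can only see the revisions currently checked out, not older ones in the history.

```python
import os
from pathlib import Path

TWO_GIB = 2 * 1024**3  # 2,147,483,648 bytes, the limit named in the error message


def oversized_files(root: str, limit: int = TWO_GIB) -> list[tuple[str, int]]:
    """Return (path, size) for every file under root larger than limit, biggest first."""
    hits = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Skip version-control metadata directories.
        dirnames[:] = [d for d in dirnames if d not in {".svn", ".git", ".hg"}]
        for name in filenames:
            path = Path(dirpath, name)
            if not path.is_file():  # e.g. broken symlinks
                continue
            size = path.stat().st_size
            if size > limit:
                hits.append((str(path), size))
    return sorted(hits, key=lambda item: item[1], reverse=True)


print(2_152_217_417 - TWO_GIB)  # 4733769 bytes, i.e. about 4.5 MiB over the limit
```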

On the Git side, I could successfully convert the project. Sadly, I had to add some parameters to make sure that I didn't run out of RAM when Git does its repacking. Without the window-memory=512m setting, it would eat all 32 GB of my computer's RAM. I also tried tweaking some of the compression settings, but they didn't seem to help with speed or RAM usage.

("Repacking" is what Git does to make sure that all commits are compressed and stored in a close-to-optimal way.)
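For reference, such a memory cap can be passed to a manual repack with `git repack --window-memory=...`, or set once per repository through the `pack.windowMemory` configuration key so that automatic repacks pick it up too (Git applies the limit per packing thread). The sketch below wraps both commands in Python. The 512m value is the one from this test; the other parameters used in the actual conversion are not reproduced here, and the helper names are hypothetical.

```python
import subprocess


def repack_commands(repo: str, window_memory: str = "512m") -> list[list[str]]:
    """Commands that cap Git's delta-search memory for one repository."""
    return [
        # Persist the cap so automatic (background) repacks respect it as well.
        ["git", "-C", repo, "config", "pack.windowMemory", window_memory],
        # Repack all objects into a single pack and delete the now-redundant packs.
        ["git", "-C", repo, "repack", "-a", "-d", f"--window-memory={window_memory}"],
    ]


def run_repack(repo: str) -> None:
    """Run the commands above, stopping at the first failure."""
    for cmd in repack_commands(repo):
        subprocess.run(cmd, check=True)
```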

Thankfully, even when the repacking process was killed by the Out-Of-Memory (OOM) killer, it didn't stop the process I had kicked off to convert the repo from SVN to Git. This is because the repacking job runs as a background process: you can continue to add new commits and work on the repo before it finishes. However, because the repacking could not finish, the final repo was bigger, and git clone would fail until the repo had been properly repacked.

In addition, I noticed that git add took quite a long time on large files, which, besides the repacking problem, would also be quite annoying for artists to deal with. For files larger than 3 GB, the git add operation could take around a minute to finish. (I did notice, though, that the operation didn't seem to be multithreaded, so perhaps this could be improved.)

Now that the flaws of regular Git had started to surface, I wanted to create graphs that would accurately showcase them.

Final test with the Spring short film repo

As the Gold project was still in production, I decided to switch to a similarly sized finished project, so we could easily reproduce the results.

I switched to the Spring short film as a data set. It also has over 3000 commits, and its SVN server repo is 247.9 GB.

To easily gather all the data I wanted, I created a script that tracks the local checkout and server sizes, as well as the time it takes to create a commit. I also tracked the total size of all files modified or added in each commit.
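The actual script is in the zip file attached at the end of this post. As a rough idea of what such a harness involves, here is a minimal sketch that times one command and measures directory sizes. The function names and the shape of the result are our assumptions, not the author's code.

```python
import os
import subprocess
import time


def dir_size(path: str) -> int:
    """Total size in bytes of all files under path (symlinks are not counted)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(path):
        for name in filenames:
            full = os.path.join(dirpath, name)
            if not os.path.islink(full):
                total += os.path.getsize(full)
    return total


def timed(cmd: list[str], cwd: str) -> float:
    """Run cmd in cwd and return the wall-clock time it took, in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, cwd=cwd, check=True)
    return time.perf_counter() - start


def measure_commit(checkout: str, server: str, changed: list[str], cmd: list[str]) -> dict:
    """One data point: time to add, size of the changed files, and both repository sizes."""
    seconds = timed(cmd, cwd=checkout)
    return {
        "seconds": seconds,
        "changed_bytes": sum(os.path.getsize(os.path.join(checkout, p)) for p in changed),
        "checkout_bytes": dir_size(checkout),
        "server_bytes": dir_size(server),
    }
```

For Git, `cmd` would be something like `["git", "add", "--", *changed]`, and for SVN `["svn", "commit", "-m", message]`. Since all testing ran on a local file system, the "server" here is simply another local directory.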

For the "time to add", I measured the svn commit command for SVN and the git add command for Git. Because all testing was done on a local file system with no network transfers, the file transfer speed should be the same for both commands, so I consider the two measurements roughly equivalent.

Note, though, that for the end user a slow git add is usually much worse than a slow svn commit, as committing multiple files becomes much more tedious and time-consuming.

Here are the graphs for SVN and Git:

Here we can see that, while adding big binary files is slower with Git than with SVN, svn commit also shows a noticeable slowdown.

Note that the local SVN checkout grows so much because there don't seem to be any hooks or a built-in way to do automatic cleanup. This means that if you have not run svn cleanup since the first commit to the repo, the local repo contains all uncompressed file revisions.

The most interesting line here is probably the SVN server size. The growth of the local checkout, however, also shows what would happen if I didn't do any automatic cleanup during the following tests with Git LFS.

The sharp increases and subsequent drops in the disk usage of the local Git checkout are caused by the automatic Git repacking background job starting and ending: the repacking step keeps the older data around until it has finished.

As we can see, regular Git is actually quite competitive with SVN! Nevertheless, I would say that the repacking problem and the long git add times are bad enough to still look for alternatives...

Which leads us to: Git LFS.

Git LFS doesn't do any file diffing or compression; instead, it simply uploads the untouched binary files to the Git LFS server. This means that the total size of the Git LFS server repo is the total size of all binary files ever added to the project.
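Because every version is kept whole and identified by the SHA-256 hash of its contents, the LFS storage for a set of revisions is simply the sum of the sizes of the distinct file versions: a one-byte change costs a full new copy, while re-committing byte-identical contents costs nothing extra. A small sketch with made-up revision contents (the helper name is hypothetical):

```python
import hashlib


def lfs_storage_bytes(revisions: list[bytes]) -> int:
    """Bytes an LFS store needs for these file versions: whole files, deduplicated by SHA-256."""
    unique = {hashlib.sha256(data).hexdigest(): len(data) for data in revisions}
    return sum(unique.values())


v1 = b"a" * 1000
v2 = b"a" * 999 + b"b"  # one byte changed: still stored as a complete new copy
print(lfs_storage_bytes([v1, v2, v1]))  # 2000
```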

With this in mind, I ran an additional test to see what would happen if I used the built-in file compression in Blender for all .blend files.

We did not do this before because we wanted SVN's binary diffing and Git's repacking to be as space efficient as possible. If we compress the .blend files, the binary structure will almost certainly be completely different between revisions. Without compression, a lot of data stays the same between revisions, so diffing and/or third-party compression can work more efficiently.
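To see why per-file compression defeats this, the sketch below compresses two nearly identical revisions together, once as raw bytes and once after compressing each revision on its own. Python's `lzma` module serves as a stand-in both for the history compression and for Blender's own file compression, which is not necessarily the algorithm Blender uses; the data is synthetic.

```python
import lzma
import random

# Synthetic, compressible "file" and a revision with a small edit near the start.
rng = random.Random(1)
words = ["mesh", "armature", "material", "object", "scene", "camera", "light", "node"]
rev1 = " ".join(rng.choice(words) for _ in range(20_000)).encode()
rev2 = rev1[:100] + b"EDIT" + rev1[104:]


def stored_size(a: bytes, b: bytes) -> int:
    """Size after compressing two stored revisions as one stream (history compression)."""
    return len(lzma.compress(a + b))


# Uncompressed revisions: the second one costs almost nothing extra.
raw_ratio = stored_size(rev1, rev2) / (len(lzma.compress(rev1)) + len(lzma.compress(rev2)))

# Pre-compressed revisions: the byte streams diverge after the edit, so little is shared.
pre1, pre2 = lzma.compress(rev1), lzma.compress(rev2)
pre_ratio = stored_size(pre1, pre2) / (len(pre1) + len(pre2))

print(f"uncompressed revisions:   {raw_ratio:.2f} of the separately compressed size")
print(f"pre-compressed revisions: {pre_ratio:.2f} of their combined size")
```

The first ratio should come out near one half and the second near one, which is the trade-off described above.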

As you can see, Git LFS with compressed .blend files is competitive size-wise with both SVN and vanilla Git, at a data compression rate of around 50%. While the regular Git server has the upper hand, using around 100 GB less storage, Git LFS makes up for that by being much faster to commit files and to transfer them (as the individual files are smaller).

The sharp drops in local checkout size happen because I added a hook to Git that also runs git lfs prune whenever Git does automatic garbage collection. This keeps the local checkout mostly shallow, as it doesn't keep all revisions of the big binary files around.
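One way to get this behaviour is Git's `pre-auto-gc` hook, which `git gc --auto` runs just before an automatic garbage collection; the author's actual hook is in the attached zip and may differ. Hooks can be any executable, so here is a sketch in Python, to be saved as `.git/hooks/pre-auto-gc` and marked executable. It always exits with status 0, because a non-zero exit would abort the garbage collection itself.

```python
#!/usr/bin/env python3
"""Hypothetical .git/hooks/pre-auto-gc: prune old Git LFS objects whenever Git auto-GCs."""
import subprocess
import sys


def main(run=subprocess.run) -> int:
    # `git lfs prune` deletes old local LFS objects that the current checkout and
    # recent commits no longer need, so only recent binary revisions stay on disk.
    result = run(["git", "lfs", "prune"])
    if result.returncode != 0:
        print("pre-auto-gc: git lfs prune failed; continuing with gc", file=sys.stderr)
    # Exiting non-zero would abort `git gc --auto`, so always let it proceed.
    return 0


if __name__ == "__main__":
    sys.exit(main())
```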

Note that the Git LFS server size is omitted, as it is almost exactly the same as the sum of all added files. (You can observe the same thing in the SVN graph with the local .svn size and the total size of all added files.)

Here is a summary of all tests. The raw data and scripts are available in the attached zip file at the end of this blog post.

Conclusion

So what are the main takeaways?

Vanilla Git is not as bad at storing binary files as I thought. But there are some showstoppers, like the git add performance and the memory-heavy repacking when you have big binary files.

Because of this, Git LFS with .blend file compression seems to be the winner. The server-side repository size is just a bit bigger than with SVN and vanilla Git, but the time to add and transfer files seems to be better than with both of them.

A lot of popular Git frontends also come with Git LFS support out of the box nowadays, so it seems to check off all our requirements.

It should be noted, though, that Git LFS is an add-on for Git, so it is a bit trickier to make sure people use it correctly. But I don't think it should be too hard to figure out a scheme to enforce workflows and prevent people from committing binary files not tracked by LFS. More testing is needed, though... :)
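As one possible scheme, sketched purely as an untested assumption: a `pre-commit` hook that refuses to commit files with binary extensions unless `.gitattributes` routes them through the LFS filter. `git check-attr filter -- <paths>` prints one `path: filter: value` line per path, where the value is `lfs` for LFS-tracked files. The extension list is hypothetical, and the hook only checks the attributes, not whether the staged content really is an LFS pointer.

```python
#!/usr/bin/env python3
"""Hypothetical .git/hooks/pre-commit: block binary files that bypass Git LFS."""
import subprocess
import sys

BINARY_EXTENSIONS = (".blend", ".png", ".exr", ".wav")  # illustrative list only


def not_in_lfs(check_attr_output: str) -> list[str]:
    """Parse `git check-attr filter` lines ("path: filter: value") and return non-LFS paths."""
    bad = []
    for line in check_attr_output.splitlines():
        path, _attr, value = line.rsplit(": ", 2)
        if value != "lfs":
            bad.append(path)
    return bad


def main() -> int:
    # Files added, copied or modified in the commit being created.
    staged = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    ).stdout.splitlines()
    binaries = [p for p in staged if p.lower().endswith(BINARY_EXTENSIONS)]
    if not binaries:
        return 0
    attrs = subprocess.run(
        ["git", "check-attr", "filter", "--", *binaries],
        capture_output=True, text=True, check=True,
    ).stdout
    bad = not_in_lfs(attrs)
    for path in bad:
        print(f"pre-commit: {path} is not tracked by Git LFS", file=sys.stderr)
    return 1 if bad else 0  # non-zero aborts the commit


if __name__ == "__main__":
    sys.exit(main())
```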

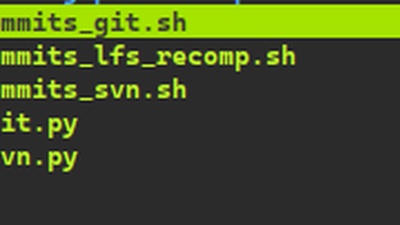

Here are the raw data and script files if anyone wants to take a closer look at them:

5 comments

Great write-up. Still, I'm left wondering how to deal with version conflicts with binary (e.g. .blend) files. To my knowledge they can't be merged easily, so only one team member should edit and push a file at a time. How do you guys deal with it?

@Cedric Steiert As you stated it is only feasible to have one person working on a .blend file at a time.

At the studio we use a lot of linking and library overrides. This means that even if the geometry/texturing etc is not done for a character our animators can still animate as the geometry is linked into the animation file.

However, this still needs a lot of coordination and helper scripts. We are hoping we can improve this workflow further in the future.

I've used git lfs for blender projects with lots and lots of binary assets for a long time. It's been very robust.

One important thing to consider with git-lfs over "vanilla" git is that with vanilla git you have to download all the versions of the files as they've changed over time. With LFS you get the current one and download other versions only as you need them, by going back in history.

Much better for the Blender Studio's needs than git-lfs would be git-annex, which precedes git-lfs and is much more powerful and flexible. We use it at work at Spritely to manage many documents, and I use it personally for all my Blender projects, and any other project that requires keeping track of binary data! https://git-annex.branchable.com/

@Christine Lemmer-Webber Interesting! Have you used it on Windows? From reading comments online, it seems like Windows support is not that great because it relies on symlinks.
